How I learned to stop worrying and love open science

An early career researcher's perspective on open science

Abstract

The open science (OS) movement provides a promising avenue for tackling many of the issues surfacing in research and publishing. However, when I first heard about open science and its wider implications, I felt some resistance to the idea of getting involved myself. One reason was that the discussion about open science on Twitter, in the blogosphere and in traditional journalism was dominated mainly by established, senior scientists.

To engage a wider audience of early career researchers, we need to consider telling open science “through human stories”. In the following post I recount my experience of the replication crisis and the methods I am starting to implement in my daily research. Hopefully this will inspire other young scientists to consider using some of the freely available open science tools in their own research.

In 2011, around the time I started my journey into research, the replication crisis in Psychology was in full swing. Many supposedly fundamental findings turned out to be flukes, so much so that Psychology textbooks will likely have to be rewritten.

I remember learning about ‘embodied cognition’ in one of my undergraduate classes. The theory proposes that the state and context of our body can influence the mind, and that mind and body are not clearly separable, distinct entities.

Illustration by Lovisa Sundin.

My classmates and I were just starting out in scientific research, so our lecturers encouraged us to split into teams and set up a replication of an established effect within the two hours of our class. My team chose the facial-feedback hypothesis, an experiment originally conducted by Strack, Martin and Stepper (1988). In their seminal study, the investigators instructed participants to hold a pen in their mouth in one of two ways: between the teeth, which facilitates smiling, or between the lips, which inhibits it. This engaged (or blocked) the muscles involved in smiling without explicitly asking participants to smile or frown.

Strack and colleagues found that participants in the ‘smile’ group rated the same cartoons as funnier than participants in the ‘frown’ group did. Full of enthusiasm, my team of fellow first-time researchers and I divided our class into two groups, instructed them on how to hold the pens and left them to watch and rate video clips from ‘The Simpsons’.

While I don’t remember if we managed to reproduce the findings of the original study, a recent large-scale effort by Eric-Jan Wagenmakers and colleagues (2016) failed to replicate the effect across 17 independent experiments. Of course, this doesn’t call into question the whole field of embodied cognition research, but it does cast serious doubts on the soundness of this and many other flashy, sensational findings, whose headlines you might have skimmed on Facebook or Buzzfeed.

Illustration by Lovisa Sundin.

Many more failed replication studies (documented by the Open Science Collaboration, Camerer and colleagues (2018) and the Many Labs project), along with scandals involving psychologists who either fabricated their data outright or analysed their results in so many different ways that they eventually found something to report (a questionable research practice now commonly known as ‘p-hacking’), have sparked a discussion in the field about how we do our research, how we analyse our data and how we share it with other researchers and the world.
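To see why analysing the same data “in so many different ways” is a problem, here is a minimal sketch in Python (my own illustration, not part of any study mentioned here; the function names are hypothetical). It assumes there is no real effect, so the null hypothesis is true, and that each analysis is independent. Under a true null, a valid test’s p-value is uniformly distributed between 0 and 1, so the chance of at least one ‘significant’ result among k analyses at α = 0.05 is 1 − 0.95^k. The code computes that value directly and checks it with a quick simulation.

```python
import random

ALPHA = 0.05  # conventional significance threshold


def familywise_error(k: int, alpha: float = ALPHA) -> float:
    """Chance of at least one p < alpha among k independent tests when every null is true."""
    return 1 - (1 - alpha) ** k


def simulate_p_hacking(k: int, n_studies: int = 50_000,
                       alpha: float = ALPHA, seed: int = 2018) -> float:
    """Fraction of simulated no-effect studies where at least one of k analyses is 'significant'.

    Assumption: under a true null, each analysis yields a p-value drawn uniformly
    from [0, 1], and the k analyses are independent of each other.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # The 'p-hacker' keeps trying analyses and reports the study if any one of them works.
        if any(rng.random() < alpha for _ in range(k)):
            hits += 1
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 5, 10, 20):
        print(f"{k:>2} analyses: analytic {familywise_error(k):.3f}, "
              f"simulated {simulate_p_hacking(k):.3f}")
```

Under these assumptions, trying 20 analyses gives roughly a 64% chance (1 − 0.95^20 ≈ 0.64) of finding a ‘significant’ result in pure noise. In practice, different analyses of the same dataset are correlated, so the real inflation is usually smaller than this independent-tests figure, but it can still sit well above the nominal 5%.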

This reproducibility issue is not unique to Psychology: Biology, the Medical Sciences and Cancer Research in particular are affected as well. However, the way researchers in Psychology have dealt with the fallout of the crisis is quite innovative. A new movement has emerged in the field that follows the philosophy and tenets of Open Science.

As the funding body Research Councils UK (RCUK) states in its guiding principles, ‘publicly funded research data are a public good, produced in the public interest, which should be made openly available with as few restrictions as possible in a timely and responsible manner that does not harm intellectual property.’

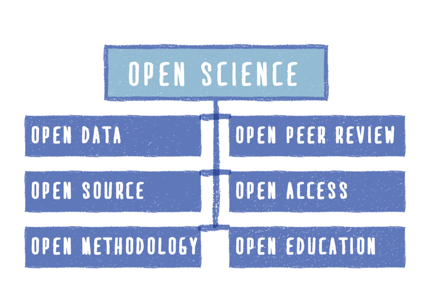

In other words, research should be transparent and accessible. These are the basic goals of the open science movement. It is important to note here that paying large sums of money to make journal articles open access (i.e. ‘gold open access’) should not be confused with Open Science.

Not only should people (let’s be real, mainly fellow scientists researching the same niche topic you are interested in) be able to access your finished manuscript, but every other step of the research process should be open and transparent as well, ultimately improving the way science is done.

Illustration by Lovisa Sundin.

The Open Science movement has developed a set of tools to guide researchers in making every step of the way open, accessible and shareable. But what does this look like when put into practice?

As a first step towards implementing Open Science practices, I pre-register my hypotheses and analyses on aspredicted.org before I collect my data. This produces a time-stamped document, which I can make public at any point after I finish an ongoing experiment. It is one way to hold yourself accountable when it comes to data analysis, and it helps you navigate the muddy waters of distinguishing between genuine predictions and ideas that arose only after you saw the data.

Moving away from expensive, proprietary software, I now use an openly available program for analysis and visualisation. And last but not least, I have just uploaded my very first preprint to an online repository (i.e. ‘green open access’), which allows absolutely everyone to read the first draft of my submitted manuscript. Ideally, this will enable peers (and enthusiastic, interested parents in my case) to give feedback and improve the manuscript before the journal’s peer-review process even starts.

One of the advantages of publishing your research in scientific journals is exactly this: the peer-review process. Imagine how much the quality of your experiments would improve if you could get feedback on the design and planned analyses even before starting data collection. And on top of that, provided you follow the specified experiment plan, you would receive an in-principle acceptance from the journal. This means the journal would publish your results even if they are unglamorous and do not fit the main story of your field of research. Congratulations, your dreams have come true in the form of the registered report (more information and resources below)!

The award-winning team of lecturers at the University of Glasgow (UofG) School of Psychology behind #PsyTeachR are fully embracing the Open Science movement and are teaching the next generation of researchers how to use R for reproducible data analysis. Many other labs, universities and global collaborations are following suit and are implementing open science practices as well.

I am in the very fortunate position that my PI (principal investigator), Professor Emily Cross, fully supports me in applying Open Science practices in my research. Our lab has included a statement on our website setting out the rationale behind Open Science and the aspects of it we seek to integrate. However, I realise that not everyone finds themselves in such a supportive lab environment. Dr Ruud Hortensius, postdoc in our lab and researcher at the UofG Institute for Neuroscience, recommends reaching out online in such cases: “Join Twitter! There is a large online community that helps early career researchers, can provide tips and tricks, arguments for Open Science, but also balanced arguments on why it’s not always possible to share your data.”

Thank you, Ruud, for chatting with me about open science, and special thanks to Lovisa for providing the artwork for this post! You can read more about Ruud’s research on his website, or connect with him on Twitter. More of Lovisa’s wonderful artwork can be found on her website, or you can check out her TEDx talk.

Title image by rawpixel on Unsplash.

References

Strack, F., Martin, L. L., & Stepper, S. (1988). Inhibiting and facilitating conditions of the human smile: A nonobtrusive test of the facial feedback hypothesis. Journal of Personality and Social Psychology, 54(5), 768-777.

Wagenmakers, E. J., Beek, T., Dijkhoff, L., Gronau, Q. F., Acosta, A., Adams Jr, R. B., ... & Bulnes, L. C. (2016). Registered Replication Report: Strack, Martin, & Stepper (1988). Perspectives on Psychological Science, 11(6), 917-928.

Resources

The open science community is very much online-based, so there are many places where you can learn more, join the conversation or take advantage of freely accessible tools and data. This is a (non-exhaustive!) list to point you in the direction of this cornucopia of resources.

Learning about Open Science

- Massive open online course (MOOC): ‘Improving your statistical inferences’
- MOOC: ‘Open Science’
- UofG Psychology’s Lisa DeBruine has some nifty slides on replication and generalisation
- ‘8 steps to open science: An Annotated Reading List’ by Crüwell and colleagues (2018)

Tools

- Open stimulus sets and data: Figshare
- Open code: GitHub
- Open research projects: OSF
- Preregistration via AsPredicted or OSF
- Registered reports (participating journals, FAQs, etc.) via the Center for Open Science
- Resource for large-scale replications ‘crowdsourcing’ the power of many labs: PsySciAcc

R for reproducible data analysis

- UofG Psychology course on using R stats for reproducible methods and data analysis
- Free stats textbook (with R)

Miscellanea

- Join the online community on Twitter: #OpenScience, #rstats, #phdchat
- Podcasts: Everything Hertz, The Black Goat Podcast, ReproducibiliTea
- More information on the replication crisis and open science: ‘Open Science is now the only way forward for psychology’ and ‘Open is not enough’

Originally published at uofgpgrblog.com on November 30, 2018.